Setting up Docker Swarm and using GitLab CI/CD to do stack deployments

There are several ways to set up a Docker Swarm host, and many tutorials cover them. But some tutorials stop short of explaining how to move forward after the initial setup.

If you are using GitLab CI/CD pipelines, one of the questions you might ask after setting up a swarm is how to run the docker stack deploy command against a remote Docker host from a GitLab runner. Before trying to answer this simple question, I would like to point out some facts about Docker Engine.

Docker Engine API

Docker Engine has a RESTful API. You can find the full API documentation here : https://docs.docker.com/engine/api/v1.40/ Because this is an HTTP/S API, we can connect to a remote Docker Engine and run commands remotely, even with curl or wget, as we can with any other HTTP/S based API.

In addition to that, the docker CLI command accepts a -H (host) parameter, similar to the mysql or redis commands. So, we can run docker CLI commands remotely by using this feature. Docker CLI commands are easier to use and more familiar than using or implementing the HTTP/S API directly, so the CLI is probably the preferred choice in a CI/CD pipeline.

Making Docker Engine API securely, publicly accessible for remote connections

When we install Docker Engine on a host machine, the Docker Engine API is by default only available through a Unix domain socket created at /var/run/docker.sock. This means that we can connect to the Docker Engine API only locally from the host. In order to enable remote connections, we must enable a TCP socket. But there is a problem: when the default TCP socket at port 2375 is enabled, it provides "un-encrypted and un-authenticated direct access to the Docker daemon" according to the documentation : https://docs.docker.com/engine/reference/commandline/dockerd/#daemon-socket-option

As the documentation states, we should either configure an encrypted and authenticated TCP socket or we have to set up or use a secure proxy in front of our Docker host. The proxy option is outside the scope of this post, so I will try to explain how to use secure TCP socket to access Docker Engine API remotely.

First of all, we have to create TLS keys and certificates and configure the Linux init scripts or configurations to tell Docker Engine to use these keys while providing a secure connection on port TCP/2376. You can find the tutorial about creating TLS keys in order to secure Docker Engine API here : https://docs.docker.com/engine/security/https/

If we follow the tutorial above, at the end, we will have a few TLS keys and certificate files that can encrypt and protect client-server communication while accessing a remote Docker Engine API (Docker daemon). The beauty of this setup is that without the client keys/certificates, nobody can access the Docker Engine API. So, only holders of those files can connect.

The important thing to keep in mind is we need those TLS keys/certificates to securely run Docker commands on a remote host in a Gitlab runner or anywhere else. And we have to configure the Docker Engine to use these keys/certificates to provide secure and authenticated remote service.

At this point we have two options :

- We can manually generate TLS keys/certificates and configure the Docker host to use them. After generating TLS keys once, we can use the same keys/certificates to add more Docker hosts in our infrastructure.

- We can use another tool called Docker Machine to automate this process.

Docker Machine

Docker Machine is a CLI tool that enables installing Docker Engine automatically on hosts. It can also be used for provisioning virtual servers (VPS) from VPS or Cloud Providers like AWS, Microsoft Azure, Linode, etc. So it basically can :

- create/provision a virtual server based on the --driver option.

- install Docker Engine on a given host (VPS or bare-metal).

- generate required TLS keys and do the necessary configuration automatically for having a secure remote access to the Docker Engine API.

Docker Machine tool can automatically do all the hard work for making a remote host ready to securely accept requests from any client, if the client uses the required client-side TLS keys and certificates. After that, we can use docker stack deploy commands to automatically deploy our docker-compose files to our Docker Swarm host.

But please keep in mind that "Docker Machine" is a tool that should be used on the client side for provisioning Docker hosts. That means we don't need to install it on our Docker Swarm servers. It just automates what we could do manually.

In order to use Docker Machine commands on your local computer, you have to install it separately as explained here : https://docs.docker.com/machine/install-machine/

Setting up Docker Swarm

Installing Docker Engine automatically on the remote host and enabling secure Docker Engine API.

After installing Docker Machine, you can use the docker-machine CLI command on your computer.

For the purpose of this example, let's assume that we have an already provisioned VPS server from any provider and have set up its SSH server to accept our SSH key ~/.ssh/id_rsa for the root user.

You should not use the docker-machine create command below on a host that already has a Docker Engine running containers. The Docker Machine generic driver restarts the Docker daemon as part of the process, which stops all running containers. In addition to this, the command might overwrite existing TLS certificates under /etc/docker/ which might cause some other problems.

In order to set up Docker Engine on the remote host, we can run :

docker-machine create --driver generic --generic-ip-address=<PUBLIC_IP_ADDRESS> --generic-ssh-user=root --generic-ssh-key=$HOME/.ssh/id_rsa myswarm

Let's take a look at the parameters we specified for the command :

--driver generic : By using this parameter, we specify that we want to set up the Docker Engine for an SSH enabled virtual or physical host. If you want to automatically provision virtual machines or instances from cloud providers you can check the list of available driver options : https://docs.docker.com/machine/drivers/

--generic-ip-address : We are giving the public IP address of our remote host

--generic-ssh-user : the SSH user that is used for connecting to the remote SSH server. It may be skipped if the user is root

--generic-ssh-key : the key that is used for SSH authentication. If skipped, the default key is used.

myswarm : This is the hostname we specify, which will be used both on the server and locally as machine name. docker-machine --driver generic changes server's host name based on this given name.

When this command is run, the output of the command should be similar to this :

Creating CA: /home/user/.docker/machine/certs/ca.pem

Creating client certificate: /home/user/.docker/machine/certs/cert.pem

Running pre-create checks...

Creating machine...

(myswarm) Importing SSH key...

Waiting for machine to be running, this may take a few minutes...

Detecting operating system of created instance...

Waiting for SSH to be available...

Detecting the provisioner...

Provisioning with debian...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

Checking connection to Docker...

Docker is up and running!

To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env myswarm

Please take a look at the first two lines :

Creating CA: /home/user/.docker/machine/certs/ca.pem

Creating client certificate: /home/user/.docker/machine/certs/cert.pem

We see here that docker-machine generates the required client TLS keys and certificates under the ~/.docker/machine/certs/ directory. That's great because we can grab those keys/certificates to use for connecting to the Docker host.

After docker-machine create command runs successfully, it means that the Docker Engine API on our Docker host can be accessed over the IP address we specified at port 2376 (which is the default secure TCP port for Docker Engine).

Setting up Docker Swarm

In order to initiate Docker Swarm mode, we have to log in to the Docker host via SSH and run the docker swarm init command. At this point, we can use either docker-machine ssh or a direct ssh command to access the host over SSH.

The docker-machine tool provides an easy way to connect to the remote host over SSH.

docker-machine ssh myswarm

After accessing the server, we must initiate Swarm mode by using the following command :

docker swarm init --advertise-addr <MANAGER-IP>

You must replace <MANAGER-IP> with the IP address of your host.

If the command is successful, the output will be similar to this one :

Swarm initialized: current node (uz6y79ahm4d1l) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3asa2ztaz1em5abru5oxh2owix8ranbois2n5ho3y5u 192.168.0.100:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Please note that port 2377 is the Swarm cluster management port, not the Docker Engine API port. You can't access the Docker Engine API by trying to connect to port 2377. That port is used by Docker Swarm itself.

You can use the given docker swarm join command on other hosts (without using docker swarm init) to make them join the Docker Swarm cluster as worker nodes.

If we want to add another worker node to our Swarm cluster, first we must set up another server by using the docker-machine create command. If you use the --driver generic option to set up another host, it will automatically use the same TLS keys/certificates to configure the Docker Engine. In other words, once the TLS keys/certificate files are created under the ~/.docker/machine/certs folder, docker-machine create always uses the same TLS files to set up Docker hosts if the generic driver is used. For other driver options, you should check their documentation for this use case.
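If you have several hosts to prepare, the repeated docker-machine create calls can be generated in a loop. The following is only a sketch: the helper name print_create_commands and its NAME=IP argument format are made up for illustration, the IP addresses are documentation placeholders, and the function only prints the commands so that you can review them before running anything.

```shell
#!/bin/sh
# Hypothetical helper (not part of docker-machine): prints one
# "docker-machine create" command per NAME=IP argument, using the same
# generic-driver flags shown earlier in this post. Nothing is executed.
print_create_commands() {
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        printf 'docker-machine create --driver generic --generic-ip-address=%s --generic-ssh-user=root --generic-ssh-key=%s %s\n' \
            "$ip" "$HOME/.ssh/id_rsa" "$name"
    done
}

# Example with placeholder names and addresses:
print_create_commands myswarm-worker1=203.0.113.11 myswarm-worker2=203.0.113.12
```

Printing instead of executing is a deliberate choice here, because docker-machine create restarts the Docker daemon on the target host.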

At this point, we know where our TLS client keys/certificates are located on our local computer and we also know that we can use those files to remotely run Docker commands on a Docker host.

Using Docker CLI commands on remote host

By using Docker Machine CLI command

You can use Docker Machine CLI to help you to set up local environment to run Docker commands on the remote host :

eval $(docker-machine env myswarm)

When you run this command, it sets environment variables on your local computer so that any docker CLI command runs on the remote host.

Please note that after using the command above, almost all docker commands will be run on the remote host named myswarm. To cancel this behavior, you can simply close your terminal window and start a new one, which discards the temporary environment variables. Or you can run the following command to unset the environment variables :

eval $(docker-machine env -u)

You can always check which remote host is active by running the docker-machine ls command. If you see an asterisk (*) under the ACTIVE column, docker commands run in your terminal will be executed on the remote host marked with that asterisk.
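If docker-machine is not at hand, a quick look at the DOCKER_HOST variable gives a similar hint before you run something destructive. This is a minimal sketch: docker_target is a hypothetical helper name, and it only inspects DOCKER_HOST, so it does not know about -H flags or other ways of selecting a host.

```shell
#!/bin/sh
# Hypothetical helper: reports which Docker Engine the docker CLI in this
# shell would address, judging only by the DOCKER_HOST variable.
docker_target() {
    if [ -n "${DOCKER_HOST:-}" ]; then
        printf 'DOCKER_HOST is set: %s\n' "$DOCKER_HOST"
    else
        printf 'DOCKER_HOST is not set: local default socket\n'
    fi
}

docker_target
```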

By using directly docker command

At the beginning of this post, I mentioned that docker command takes -H (host) parameter. Now, we are ready to use that parameter. We know that all the required key/certificate files are under ~/.docker/machine/certs/ folder. If we provide required certificate files to docker command as well as correct host parameter, we can run Docker CLI commands on the remote host like this :

docker --tlsverify --tlscacert=$HOME/.docker/machine/certs/ca.pem --tlscert=$HOME/.docker/machine/certs/cert.pem --tlskey=$HOME/.docker/machine/certs/key.pem -H=<HOST-IP-ADDRESS>:2376 info

You must replace <HOST-IP-ADDRESS> with your Docker Engine host's IP address.

I know this command is very long and is not suitable for practical use. It's better to use docker-machine approach for accessing remote Docker Engine API in your local computer. But if you like, you can create a bash alias and add it to your .bashrc file as :

alias docker_myswarm='docker --tlsverify --tlscacert=$HOME/.docker/machine/certs/ca.pem --tlscert=$HOME/.docker/machine/certs/cert.pem --tlskey=$HOME/.docker/machine/certs/key.pem -H=<HOST-IP-ADDRESS>:2376';

You must replace <HOST-IP-ADDRESS> with your Docker Engine host's IP address.

Then you can use it like :

docker_myswarm stack deploy -c docker-compose.yml mystack

In fact, the docker command checks the following environment variables while running :

DOCKER_TLS_VERIFY : if its value is 1 it does the same thing as the --tlsverify parameter

DOCKER_HOST : it does the same thing as the -H option

DOCKER_CERT_PATH : instead of specifying each TLS certificate and key with separate parameters, you can point to a folder which keeps all the required keys and certificates like DOCKER_CERT_PATH=$HOME/.docker/machine/certs

So a bash alias can also be written as :

alias docker_myswarm='DOCKER_TLS_VERIFY=1 DOCKER_HOST="tcp://<HOST-IP-ADDRESS>:2376" DOCKER_CERT_PATH="$HOME/.docker/machine/certs" docker';

You must replace <HOST-IP-ADDRESS> with your Docker Engine host's IP address. Note that the assignments are separated by spaces, not semicolons. Written this way, the shell passes them to the docker process as environment variables for that single command. With semicolons they would only become plain shell variables, which docker does not see unless they are exported.

When you run the eval $(docker-machine env myswarm) command, you are actually setting the same environment variables in your shell automatically. You can verify this by checking the output of the docker-machine env myswarm command. DOCKER_CERT_PATH will probably point to another directory which is specific to the myswarm host. But remember that initially those files are copied from ~/.docker/machine/certs by the docker-machine create command.

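While specifying your home directory in Docker related commands, $HOME/ should be used instead of ~/ because the latter might not work. For example, the shell does not expand ~ inside quotes or in the middle of an option such as --tlscacert=~/...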
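If you prefer not to depend on docker-machine env, the three environment variables described above can be switched on and off with two small shell functions. This is a sketch under assumptions: the function names swarm_env_on and swarm_env_off are made up, port 2376 and the ~/.docker/machine/certs path are taken from the setup in this post, and 203.0.113.10 in the usage note is a placeholder address.

```shell
#!/bin/sh
# Hypothetical helpers that set the same three variables discussed above.
# $1: IP address or host name of the remote Docker host.
swarm_env_on() {
    export DOCKER_TLS_VERIFY=1
    export DOCKER_HOST="tcp://$1:2376"
    export DOCKER_CERT_PATH="$HOME/.docker/machine/certs"
}

# Removes the variables again so docker talks to the local engine.
swarm_env_off() {
    unset DOCKER_TLS_VERIFY DOCKER_HOST DOCKER_CERT_PATH
}
```

After adding these to .bashrc, a session could look like: swarm_env_on 203.0.113.10, then docker info, then swarm_env_off.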

By using simple curl command

At the beginning of this post, I claimed that we can connect directly to Docker Engine API via curl because it is just an HTTP/S API. After securing Docker Engine API by using TLS keys and certificates, we can really use curl or wget in order to make requests to our Docker Engine API.

In order to use curl we must use --proxy-... parameters with our TLS keys/certificates to pass the authentication step.

# getting "docker info" data by using curl

curl --proxy-cacert $HOME/.docker/machine/certs/ca.pem --proxy-cert $HOME/.docker/machine/certs/cert.pem --proxy-key $HOME/.docker/machine/certs/key.pem -x https://<HOST-IP-ADDRESS>:2376 http://v1.40/info

You must replace <HOST-IP-ADDRESS> with your Docker Engine host's IP address.

The response of this command will be a JSON string which contains the same data which we could get by running docker info on the remote host.

While making requests to the Docker Engine API, as an endpoint URL we use something like http://v1.40/. v1.40 is the version of the Docker Engine API and we can use any supported API version on the same host. For example, instead of v1.40, we can use v1.24 like this: http://v1.24/.
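The same kind of request can also be made without the proxy options, by passing the client certificate directly with curl's --cacert, --cert and --key options and putting the API version in the URL path. The sketch below assumes that the server certificate is valid for the address you connect to. The helper names engine_api_url and engine_api_get are hypothetical, and 203.0.113.10 is a placeholder address.

```shell
#!/bin/sh
# Hypothetical helper: builds the URL of a Docker Engine API endpoint.
# $1: host, $2: API version (for example v1.40), $3: endpoint path
engine_api_url() {
    # ${3#/} drops one leading slash so "info" and "/info" both work
    printf 'https://%s:2376/%s/%s\n' "$1" "$2" "${3#/}"
}

# Hypothetical helper: sends a GET request authenticated with the client
# certificate files that docker-machine created.
engine_api_get() {
    certs="$HOME/.docker/machine/certs"
    curl --silent --show-error \
        --cacert "$certs/ca.pem" \
        --cert "$certs/cert.pem" \
        --key "$certs/key.pem" \
        "$(engine_api_url "$1" "$2" "$3")"
}

# Only prints the URL; call engine_api_get with the same arguments to
# actually query your own host.
engine_api_url 203.0.113.10 v1.40 info
```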

Setting up Gitlab CI/CD

Finally, we know all the details about how to run stack deploy commands remotely on our Docker Swarm Manager to automatically deploy our docker-compose files to a specified stack.

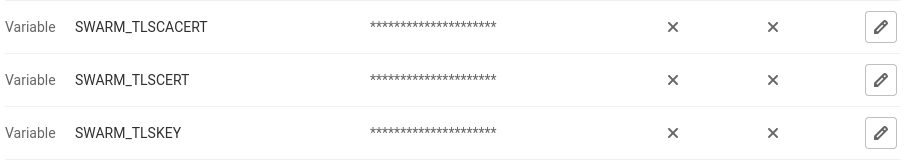

The first step is adding the contents of TLS keys/certificates as Gitlab CI/CD environment variables. You can define these variables on a repository or a group level.

The naming is up to you, of course. The example in this post assumes these variable names :

SWARM_TLSCACERT : must have the file content of ~/.docker/machine/certs/ca.pem

SWARM_TLSCERT : must have the file content of ~/.docker/machine/certs/cert.pem

SWARM_TLSKEY : must have the file content of ~/.docker/machine/certs/key.pem

After setting these variables at Settings -> CI / CD -> Variables section, we can write a sample .gitlab-ci.yml file as :

stages:
  - deploy

deploy-prod:
  stage: deploy
  image: docker:latest
  variables:
    DOCKER_HOST: tcp://<SWARM-MANAGER-IP>:2376
    DOCKER_TLS_VERIFY: 1
  script:
    - mkdir -p ~/.docker
    - echo "$SWARM_TLSCACERT" > ~/.docker/ca.pem
    - echo "$SWARM_TLSCERT" > ~/.docker/cert.pem
    - echo "$SWARM_TLSKEY" > ~/.docker/key.pem
    - docker stack deploy -c docker-compose.yml myproject

deploying docker-compose file to a swarm stack called "myproject"

You can also think of alternative ways to write this .gitlab-ci.yml file. For example, instead of using text-based environment variables, you can use environment variables of type "file" and change the script accordingly.

Another thing you might notice is that I did not set the DOCKER_CERT_PATH environment variable or provide --tls... parameters to the docker command. That is because the docker command checks the ~/.docker/ folder for TLS keys/certificates by default. So if you place your TLS files under the ~/.docker/ directory, you don't have to set DOCKER_CERT_PATH.
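The mkdir and echo lines of the job can also be collected into one function that refuses to continue when a variable is missing, which gives a clearer error than a failing TLS handshake later on. This is a minimal sketch, not a GitLab feature: write_tls_files is a hypothetical helper, the variable names are the ones chosen in this post, and the code sticks to plain POSIX sh on the assumption that the job image may not ship bash.

```shell
#!/bin/sh
# Hypothetical CI helper: writes the three TLS variables to files.
# $1: target directory (defaults to ~/.docker)
write_tls_files() {
    dir=${1:-$HOME/.docker}
    # Refuse to write anything if one of the CI variables is empty.
    if [ -z "${SWARM_TLSCACERT:-}" ] || [ -z "${SWARM_TLSCERT:-}" ] || [ -z "${SWARM_TLSKEY:-}" ]; then
        echo "one of the SWARM_TLS* variables is empty" >&2
        return 1
    fi
    mkdir -p "$dir" || return 1
    (
        umask 077   # files created in this subshell are owner-only
        printf '%s\n' "$SWARM_TLSCACERT" > "$dir/ca.pem" &&
        printf '%s\n' "$SWARM_TLSCERT" > "$dir/cert.pem" &&
        printf '%s\n' "$SWARM_TLSKEY" > "$dir/key.pem"
    )
}
```

In the job, the function could be defined in a script file kept in the repository, sourced in the script section, and called as write_tls_files before docker stack deploy.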

So, that's all for this post. I hope it might be helpful for anyone who wants to understand details about accessing a remote Docker host.